The Future of Pulp & Paper Manufacturing

The pulp and paper industry is one of the largest industrial sectors in the world. Over the past two decades, it has changed considerably, driven by advances in digital technology, environmental pressures, rising energy and labor costs, and sustainability objectives.

This post discusses these changes as well as their effects on the sector’s future prospects.

Digital Transformation for Pulp and Paper

The pulp and paper industry has undergone several digital transformations, with mills adopting new technologies to improve efficiency and reduce their environmental impact. For instance, many mills have adopted smart sensors that monitor production processes and help optimize performance.

Automation has also played a role in the industry’s modernization; some mills have replaced workers with robots to cut costs and improve safety.

In addition, many mills have adopted digital technologies to improve their communication with customers and suppliers. This has allowed them to streamline their operations and better meet the needs of their stakeholders.

Ultimately, the goal of these digital transformations is to make the pulp and paper industry more sustainable. By reducing energy consumption and emissions, mills can become more environmentally friendly and protect the natural resources that they rely on. By embracing new technologies, the pulp and paper industry can continue to thrive into the future.

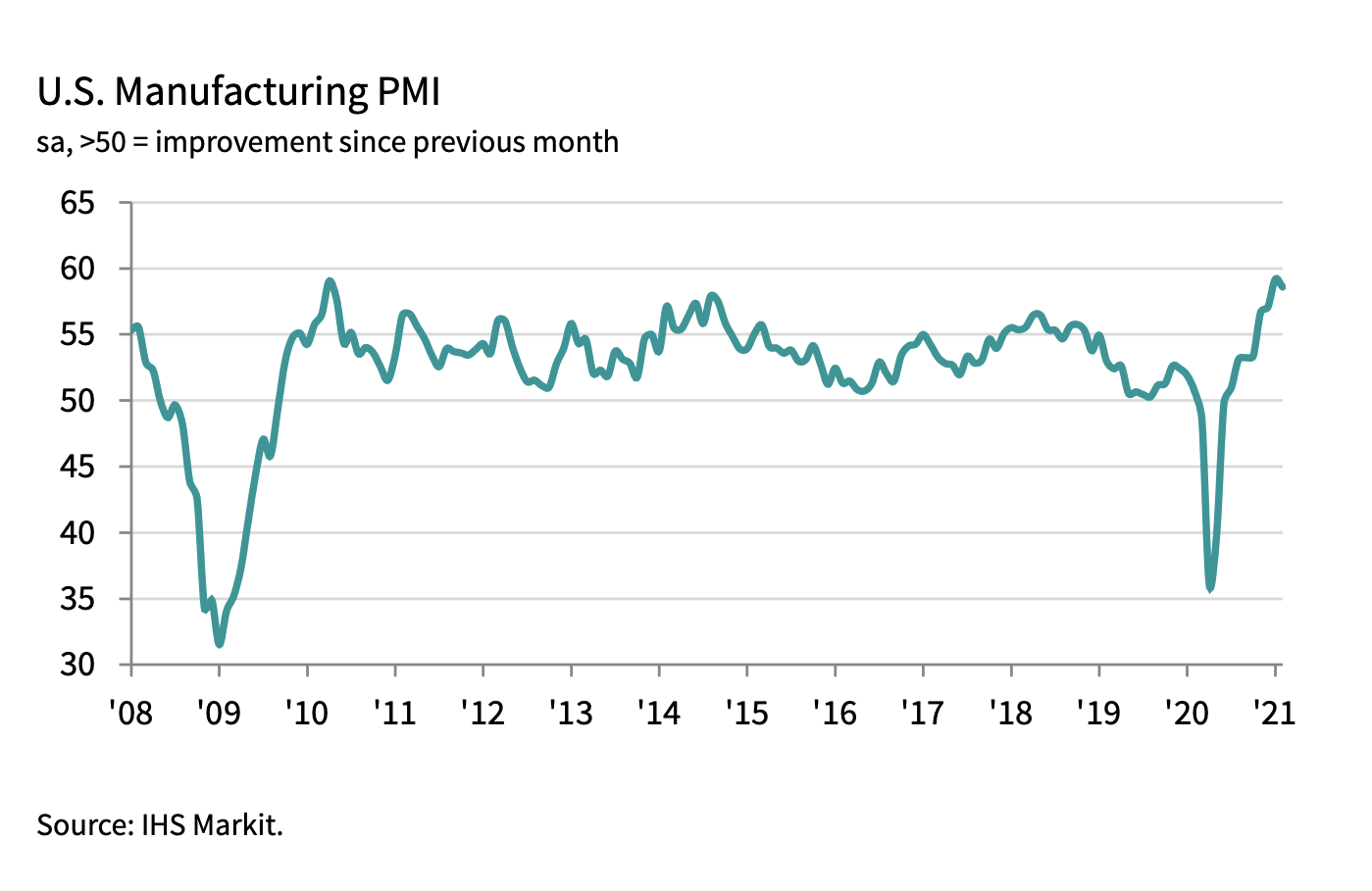

Pulp and Paper Manufacturing: Rising Energy and Labor Costs

The pulp and paper industry has also been affected by rising energy costs. The cost of pulp, one of the largest expenses for mills, is highly sensitive to oil prices; when crude falls (as it did from 2014 through 2016), pulp prices tend to decrease as well.

Many mills have had trouble securing natural gas supplies on favorable terms due to the strong demand for this commodity in North America. As a result, firms’ prospects have diverged across regions and product segments.

Mills in Europe, China, and India also face rising labor costs and increased competition from South Korean conglomerates known as chaebols. While these factors will likely hold Chinese pulp production back somewhat, India is expected to see strong growth in the coming years as it ramps up its own pulp manufacturing.

Sustainability in Pulp and Paper Manufacturing

Environmental factors have been another key driver of change in the pulp and paper sector. Mills are increasingly being forced to comply with stricter regulations governing emissions, effluents, and waste management.

The pulp and paper manufacturing industry is a vital part of the global economy, but it also has a significant environmental impact. To be sustainable, it must reduce its environmental footprint while still meeting the needs of consumers and businesses.

In particular, concerns over climate change have led companies to seek ways to reduce their carbon footprint. This has included measures such as installing renewable energy sources at mills and investing in forest conservation initiatives.

There are several ways to make pulp and paper more sustainable. One is to use recycled materials in the manufacturing process. Another is to use energy-efficient equipment and processes. Finally, companies can reduce their overall environmental impact by implementing responsible management practices.

By making changes like these, pulp and paper manufacturers can help ensure that this important industry remains environmentally friendly for years to come.

Smart Manufacturing and Autonomous Control for Pulp and Paper Manufacturing

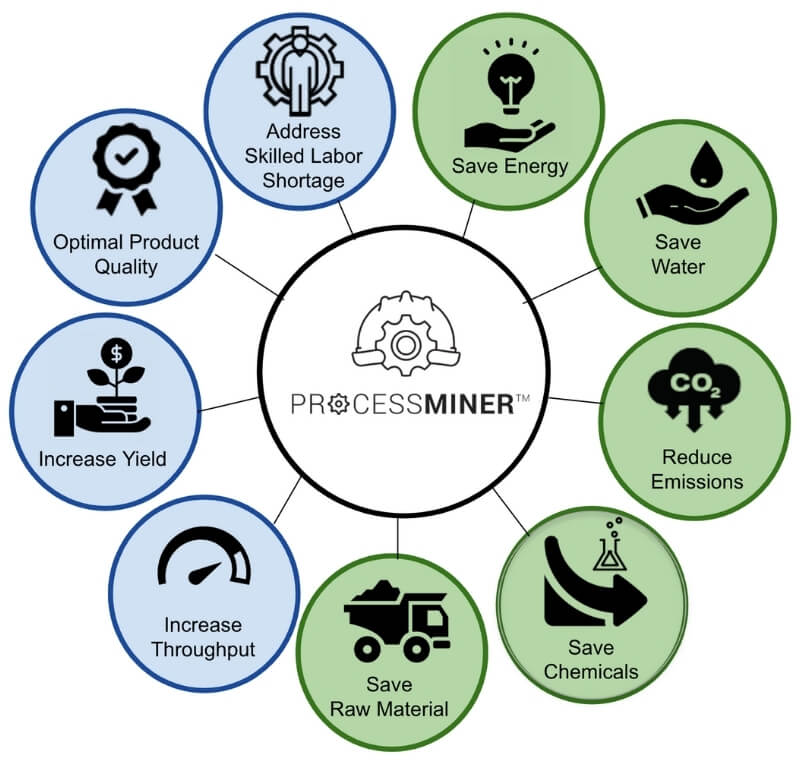

Proactive and accurate process control is critical to optimizing plant operations and reducing quality variations in pulp and paper manufacturing. Smart technology is being adopted by the pulp, paper, and packaging industries; this digital transformation in manufacturing reduces raw material consumption and process variability, drives down costs, increases throughput, and helps manufacturers gain a competitive edge with customers.
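
To make the idea of watching process variability a little more concrete, here is a minimal sketch of a classic control-limit check in Python. It is an illustration only and not a description of how any particular platform works: the function names, the three-sigma threshold, and the basis-weight readings are all assumptions made up for this example.

```python
from statistics import mean, stdev


def control_limits(baseline, k=3.0):
    """Return (lower, center, upper) limits from baseline readings.

    Assumes the baseline was collected while the process was running well.
    k is the number of standard deviations allowed (3.0 is a common choice).
    """
    center = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation
    return center - k * spread, center, center + k * spread


def out_of_control(readings, lower, upper):
    """Return the indices of readings that fall outside [lower, upper]."""
    return [i for i, x in enumerate(readings) if x < lower or x > upper]


# Hypothetical basis-weight readings in g/m^2 (invented numbers).
baseline = [80.1, 79.8, 80.3, 80.0, 79.9, 80.2, 80.0, 79.7]
lower, center, upper = control_limits(baseline)

# New readings from the line; the third one drifts well above the baseline.
new_readings = [80.1, 79.9, 81.5, 80.0]
flagged = out_of_control(new_readings, lower, upper)
print(flagged)
```

A check like this only reacts after a reading has already drifted. Proactive control aims to act before that happens, which is where predictive models come in.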

The ProcessMiner AI-enabled platform delivers process improvement recommendations and optimal control parameters to your pulp and paper production line in real time. Through our predictive machine learning and artificial intelligence systems, online recommendations are sent for autonomous, proactive control. Our platform ensures reductions in cost, scrap, and defects commonly encountered in pulp and paper manufacturing processes.

Learn how the ProcessMiner platform uses real-time artificial intelligence to help reduce energy consumption, raw materials, chemical usage and cost. See our quick “How it Works” video.